4 Reinventing Exam Question Assessments in the Age of AI to Reflect Diverse Voices and Promote Critical Thinking

Kelly Soczka Steidinger, M.A.

- Incorporate an assessment into course curriculum that uses artificially intelligent large language models to increase students’ technological proficiency and literacy.

- Implement an assessment that promotes students’ awareness of and sensitivity to diverse perspectives and experiences.

- Identify the value of student-constructed content by utilizing renewable and openly licensed assessments to benefit the broader educational community.

Chapter Overview

This chapter explores how undergraduate students use generative artificial intelligence (AI) models, such as Google’s Gemini, ChatGPT, and Claude, to formulate scenario-based multiple-choice questions with a critical perspective. Since artificially intelligent large language models (LLMs) are infused with inherent human bias, hallucinations, and occasional structural flaws, I created an assessment that asks students to critically review and revise AI-generated questions to ensure they accurately reflect current course content and authentically represent students’ perspectives. To promote deep learning, students justify the accuracy of the correct answers and rank the plausibility of incorrect answers, citing peer-reviewed course materials to support their reasoning. Additionally, students add authenticity and humanize questions by creatively revising the contextual details of the questions with a focus on diversity, equity, and inclusion (DEI). The newly revised student multiple-choice questions are compiled into digital learning objects for future students and openly licensed for public use as an open pedagogy project.

This chapter introduces the AI multiple-choice summative assessment that familiarizes students with LLMs, enhances the understanding of course content, and promotes inclusive exam design. Next, the chapter covers the academic benefits of the assessment, offers guidance on adopting it into the course curriculum, and presents strategies for reusing revised questions in openly licensed digital resources for future students or the public. The chapter concludes with an overview of the assessment results, limitations, and alternative applications.

Focused Questions:

- How can students use AI to advance their critical-analytical thinking skills?

- How can you revise staple assessments in your academic discipline to enable AI skill-building while continuing to develop learners’ higher-order thinking skills?

- How can you leverage AI tools to enhance student learning and develop AI literacy skills?

- How can you reinvent assessments to incorporate diversity, equity, and inclusion principles to strengthen student unity and spur creativity?

- How can you integrate renewable assessments in your course curriculum to encourage student engagement and openly license student-constructed content to benefit future students or the community?

Rationale

With the 2022 public release of generative artificial intelligence, educators have been thrust into a new, continually changing technological landscape that directly impacts student skill development in higher education. According to Zeide (2019), AI is “the attempt to create machines that do things previously only possible through human cognition” (p. 31). Bowen and Watson (2024) also offer that AI “refers to the ability of computer systems to mimic human intelligence” (p. 16). Overnight, assessments that were staples of the higher education classroom and that assessed student cognition through writing, such as the “term paper,” became obsolete. This rapid development and adoption of generative AI technologies necessitates a shift in how educators approach student skill development in higher education.

While humans have been interacting with AI for years, such as interfacing with predictive text, facial recognition, and GPS navigation systems, the release of “generative” AI in the form of large language models (LLMs) to the public is a novel advancement that impacts numerous industries, including academia (Zeide, 2019). Unlike prior forms of AI, generative LLMs go beyond pattern recognition and can create new content, including computer code, text, images, and videos (Xia et al., 2024). Since LLMs can generate unique ideas, draw conclusions, and find answers, how should instructors modify curriculum, assessments, and classroom practices to ensure students continue to advance their cognition and critical thinking skills? Constructing assessments that stimulate students’ critical thinking will be a new challenge for educators in the 21st century. This chapter provides a solution to the dilemma by instilling students with AI literacy skills.

AI Literacy Framework

The definition of AI literacy continues to mature as technology evolves. At the core of AI literacy is the “understanding and capability to interact effectively with AI technology” (Walter, 2024, p. 11). Moreover, the concept of AI literacy encompasses more than the technical aspects of interfacing with AI; it also includes the recognition of the social and ethical ramifications of AI. The integration of AI literacy into the curriculum can be better understood when educators consider Ng et al.’s (2021) framework of AI literacy. This framework entails four levels of understanding that align with the cognitive domains of Bloom’s Taxonomy. These levels, from lowest to highest levels of cognition, include:

- Students will “know and understand” how to use basic AI applications and operations.

- Students will “use and apply” AI concepts or skills in various scenarios.

- Students will “evaluate and create” using higher-order thinking skills with generative AI models.

- Students will “evaluate” AI ethics, which encourages students to consider ethical principles such as fairness, equity, accountability, and safety when using AI applications.

The AI multiple-choice assessment detailed in this chapter meets the four levels of Ng et al.’s (2021) framework. When completing the assignment, students meet the first level by writing prompts and soliciting multiple-choice questions and responses from AI models. The second level is met when students use their knowledge of AI within the “scenario” or context of exam question construction. The third level is met when students evaluate and revise questions using Bloom’s higher-order thinking skills, including analysis, evaluation, and critical thinking. The fourth and final level is met when students consider social norms and apply inclusive principles while evaluating and revising questions.

Since AI doesn’t interact with the external world, humans need to validate the authenticity of the information being generated by AI. One drawback of generative LLMs is the inability to detect human bias or stereotypes that may be present in large data sets from which chatbots pull information and provide it to users (Herder, 2023). AI presents correct and incorrect data equally, without any limitations, which results in marginalized persons and groups being underrepresented in solutions AI offers users (Herder, 2023; Leffer, 2023). Likewise, new research argues that humans may learn, reproduce, and carry this bias into offline interactions (Leffer, 2023). Thus, the need to teach AI literacy and focus on exposing and correcting this bias is imperative.

Recognizing the importance of AI literacy and the need to mitigate bias, I sought to create an assessment that would not only challenge students but also prepare them for the AI-driven future. To achieve this, I centered the assessment on constructivist principles and critical thinking, which are skills essential to developing meaningful problem-solving abilities. Constructivism is an educational philosophy that educators use to design learning activities, assessments, and knowledge-acquisition strategies. A key principle of constructivism is that learners actively cultivate knowledge for themselves (Schunk, 2020; Simpson, 2002). Moreover, constructivism has influenced instructional delivery and curriculum creation by proposing that learners actively engage with course content through “manipulation of materials and social interaction” (Schunk, 2020, p. 316). For instance, when students write AI prompts and revise multiple-choice questions to reflect their perspectives, they manipulate course content, which improves critical thinking and content retention.

Exogenous Perspective of Constructivism

An additional and equally important constructivist principle embedded into the AI multiple-choice questions assessment is the exogenous perspective of constructivism. The exogenous perspective maintains the “assumption that knowledge is derived from one’s environment and, in that sense, can be said to be learned” (Moshman, 1982, p. 373). Further, Moshman (1982) argues that the construction of knowledge is a reconstruction of information, relationships, and observed patterns found in students’ external reality, where they acquire knowledge and skills through experiences, models, and teaching. When revising AI-generated questions, students learn course content by reconstructing their perception of reality by exemplifying human characteristics. Students demonstrate competency in their knowledge and skills through question revision. Additionally, Schunk (2020) adds, “Knowledge is accurate to the extent that it reflects that reality” (p. 316). When learners revise AI multiple-choice questions to focus on representing authentic human diversity, they construct a more accurate reflection of human reality and increase their knowledge about themselves and others.

Employing an assessment that asks students to revise multiple-choice questions through the lens of diversity, equity, and inclusion (DEI) exercises students’ critical thinking. By embracing this assessment approach, students use critical thinking to “analyze questions, evaluate different perspectives, and create reasoned arguments” (Walter, 2024, p. 34). When completing the assessment, students practice:

- “analyzing” and “evaluating” AI-generated questions to identify and replace AI bias, stereotypes, and underrepresentation.

- “creating reasoned arguments” when justifying the accuracy of the AI’s identified correct answers.

- applying critical thinking when ranking the incorrect answer choices and providing “reasoned arguments” for their rankings.

Another method to stimulate critical thinking skills in the AI multiple-choice assessment involves asking students to write logical arguments to validate the correct answers and the incorrect answer ranking to the constructed questions (Harvard, 2020). There are several reasons to require students to identify where they located evidence to justify the questions’ correct answers, including:

- To increase students’ AI literacy skills by highlighting the importance of using peer-reviewed materials to check the accuracy of AI-generated content since LLMs can hallucinate and provide skewed data that could lead to “discriminatory conclusions against underrepresented groups” (Walter, 2024, p. 12).

- To guarantee the newly revised questions contain course concepts and theories that align with the course’s current materials.

- To encourage students to think critically and avoid using AI to generate explanations and answer rationales.

By building logical arguments and revising multiple-choice questions, students use creativity, critical thinking, and AI literacy skills.

Creating Renewable Assessments

The final purpose of the AI multiple-choice question assessment is to create a renewable assignment aligned with Open Pedagogy principles. As Wiley and Hilton (2018) define, renewable assessments “both support an individual student’s learning and result in new or improved open educational resources that benefit the broader community of learners” (p. 137). Conversely, disposable assignments, seen only by the instructor, often hold little value for students beyond the experience, and instructors frequently dislike grading them (Jhangiani, 2017). To benefit the wider community of learners and to extend beyond the individual student’s experience, a renewable assessment is not “disposed of,” and instead, the student’s new content is reused (Wiley & Hilton, 2018). Wiley and Hilton (2018) also specify that students should create, revise, or remix a “renewable” assessment. Grounded in constructivist philosophy, the AI multiple-choice assignment was designed as a renewable assessment: its results are openly licensed and shared, benefiting future students and the public. To be renewable, the revised multiple-choice questions are reused in future course materials, such as quizzes, exams, and reusable digital learning objects. According to Churchill (2007), learning objects (LOs) are designed for educational purposes, are digital, and are reusable. In his taxonomy of learning objects, Churchill (2007) also identifies various types of LOs, including practice objects.

Practice learning objects, such as the exam questions discussed in this chapter, allow students to rehearse procedures, drag-and-drop objects, play games, and answer quiz questions (Churchill, 2007). By utilizing practice digital learning objects, learners interact with course concepts and self-test their understanding of new knowledge. Practice quizzes using digital learning objects allow learners to participate in “practicing the retrieval of learned information,” which strengthens “the consolidation of learners’ mental representation and hence long-term retention” (Roelle et al., 2022, p. 142). Thus, inserting newly constructed students’ questions from their completed AI assignments into digital learning objects as practice quizzes will enhance future student exam performance and increase long-term retention of course information.

Assessment Description

This AI multiple-choice revision assessment was infused into a rural community college’s Introduction to Psychology course curriculum. After assessment completion, students should be able to:

- Construct AI prompts to generate scenario-based multiple-choice questions based on course theories and concepts.

- Evaluate AI-constructed multiple-choice questions for accuracy, structure, and bias.

- Apply theoretical perspectives to hypothetical scenarios when revising AI responses.

- Apply accurate representations of DEI principles by revising contextual details and language in questions.

- Justify the accuracy of the AI multiple-choice questions utilizing critical thinking skills and peer-reviewed course materials.

- Write logical arguments to support the ranking of incorrect answers.

Before or in conjunction with discussing this assignment with students, instructors should provide direct instruction on bias in AI and inclusive language. Instructors should furnish students with examples of biased and corrective language to confirm learners can identify AI language that needs revision. A student resource for identifying and revising biased language is the American Psychological Association’s Inclusive Language Guide. The guide describes inclusive language and terminology related to equity and power, person-first and identity-first language, and microaggressions (American Psychological Association, 2023). Additionally, the guide provides students with practical explanations and corrective examples in useful charts for quick reference.

After direct instruction on inclusive language, instructors should demonstrate where to find AI models and how to construct effective AI prompts. Since ChatGPT and Google’s Gemini are generative LLMs designed for general public use and offer basic services for free, students can use these models. I prefer to use Google’s Gemini because many students already have existing Google accounts, and Google provides accessible tutorials.[1]

When students begin the assessment, their first step is to write a prompt asking the LLMs to generate scenario-based multiple-choice questions. Learners then take a screenshot of the AI-generated response to provide the instructor with a copy of the student’s prompt and question before learners revise it.[2] Students should be advised to include in their prompt the educational level of the audience for which the question is being designed (undergraduate university student, graduate student, etc.) and the course subject, theory, or course concept on which the question is being written.
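The prompt elements described above (audience level, course subject, and theory or concept) can be sketched as a small template. This is only an illustrative sketch, not part of the assignment materials: the function name `build_question_prompt`, the `n_options` parameter, and the example concept "classical conditioning" are assumptions introduced here, and students would normally type an equivalent prompt directly into the chatbot.

```python
def build_question_prompt(audience_level, course_subject, concept, n_options=4):
    """Assemble a prompt asking an LLM for one scenario-based multiple-choice question.

    All parameter names are hypothetical; they mirror the details students
    are advised to include in their prompts.
    """
    if n_options < 2:
        raise ValueError("A multiple-choice question needs at least two answer choices.")
    return (
        "Write one scenario-based multiple-choice question.\n"
        f"Audience: {audience_level}\n"
        f"Course subject: {course_subject}\n"
        f"Theory or concept: {concept}\n"
        f"Provide {n_options} answer choices and identify the correct answer."
    )


# Example values are assumptions chosen for illustration only.
prompt = build_question_prompt(
    audience_level="undergraduate university student",
    course_subject="Introduction to Psychology",
    concept="classical conditioning",
)
print(prompt)
```

Keeping the audience, subject, and concept as separate fields makes it easy to regenerate a question when the first response is off-topic or not scenario-based.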

Next, students use peer-reviewed course materials, such as textbooks, instructor-generated course slides, or journal articles, to check and justify the accuracy of the correct answers to the LLM-generated questions. Students then write a paragraph rationale to explain why the answer is correct or incorrect and identify the course materials they used to locate evidence to prove the accuracy of the AI’s answers. After confirming the accuracy of the correct answer, students review the remaining answer choices, rank them from most to least plausible, and then defend and explain their rankings (Harvard, 2020), justifying their answers using course materials. Students must supply a page and paragraph number from the course textbook or the PowerPoint slide number to verify and provide evidence of their correct and incorrect answer rationales.

In the final step of the assessment, students revise the AI-generated questions to portray lived experience, eliminate bias, and increase diversity by adding contextual details to the scenario. For instance, students add diverse biographical information, such as names, ages, and locations, humanizing the question’s context. The question scenarios should be no longer than four sentences, and the details should remain relevant to the question so that they do not distract or mislead the reader from answering the question successfully. An example set of assignment directions and grading rubric for students is provided in Appendix A. Appendix B contains a sample of a completed student assignment that I shared with the students.

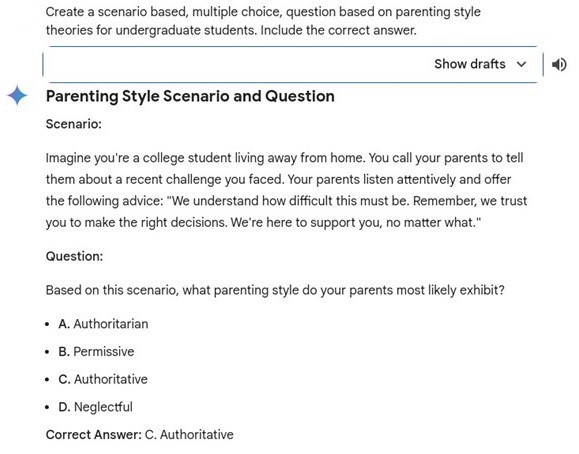

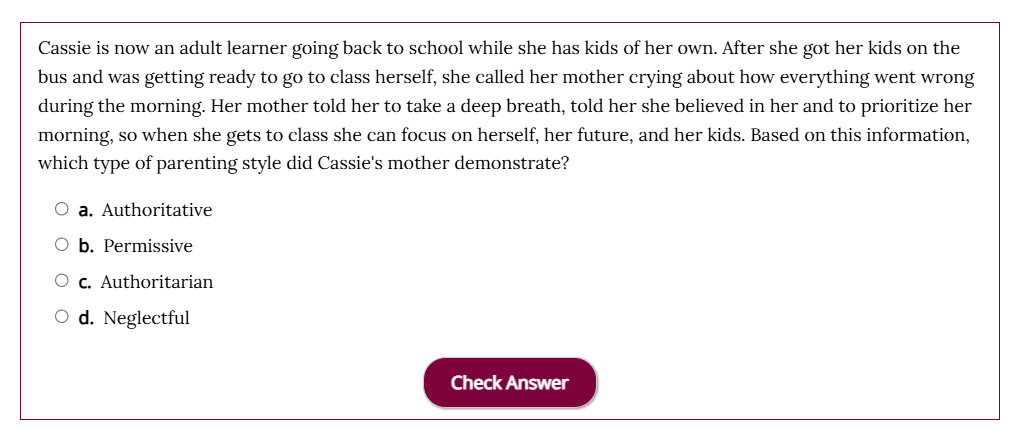

When students revise their questions, they should use inclusive language and construct a scenario portraying themselves. For example, Mid-State Technical College student Cassie Hucke exemplifies how her role as a non-generational student mother contributed to integrating an intergenerational perspective into her revised question. Figure 1 is an example of the AI-generated question before the student’s revision.

Figure 2 presents Cassie Hucke’s depiction of herself as a mother within the context of her revised question.

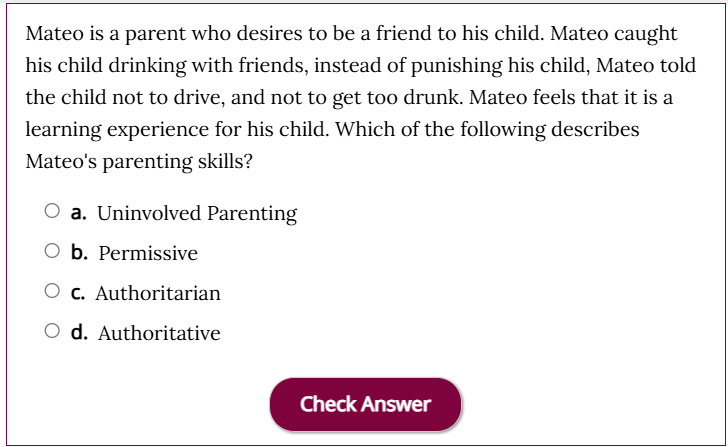

Another student, Kyle Koran, revised his question to include a cultural reference to the underage drinking problem in parts of Wisconsin. His question is shown in Figure 3.

When students submit their completed assessment documents, they are also asked to submit a short Google Form to record their preferences regarding repurposing their newly revised questions. The survey asks students if they are willing to share their newly constructed questions with future students as an openly licensed digital learning object and if they would like their names to be included below the question. Students can share their work anonymously, with their names attached, or not at all. A sample of the survey questions is provided in Appendix C.

After students submit their work, I identify and compile exemplary questions for incorporation into future quizzes, exams, and digital learning objects. I choose only questions that students identified as sharable in their survey responses. A student survey I conducted revealed that students overwhelmingly agreed to share their work with future students: 11 students indicated they were excited to contribute to future learning, 14 indicated they were satisfied to be a part of the process, six were neutral, and zero indicated they were hesitant to share their work or were not interested in sharing. These results suggest that most students were willing to share their work with others. Yet, surprisingly, most students wished to remain anonymous if their questions were shared online, with 25 students indicating they wanted to remain anonymous and only six indicating they wanted their names associated with their work.
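As a quick check on the survey counts reported above, the tallies can be converted into percentages of the 31 responses. The counts below come directly from the text; the dictionary labels and the helper name `shares` are illustrative assumptions, and this sketch is not the tool used to analyze the survey.

```python
# Counts as reported in the chapter; labels are shortened for illustration.
sharing = {
    "excited": 11,
    "satisfied": 14,
    "neutral": 6,
    "hesitant or not interested": 0,
}
attribution = {"anonymous": 25, "named": 6}


def shares(counts):
    """Return each category's percentage of the total, rounded to one decimal."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


for title, counts in (("Sharing", sharing), ("Attribution", attribution)):
    print(title, "n =", sum(counts.values()), shares(counts))
```

Both questions total 31 responses, and roughly four in five respondents preferred to remain anonymous.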

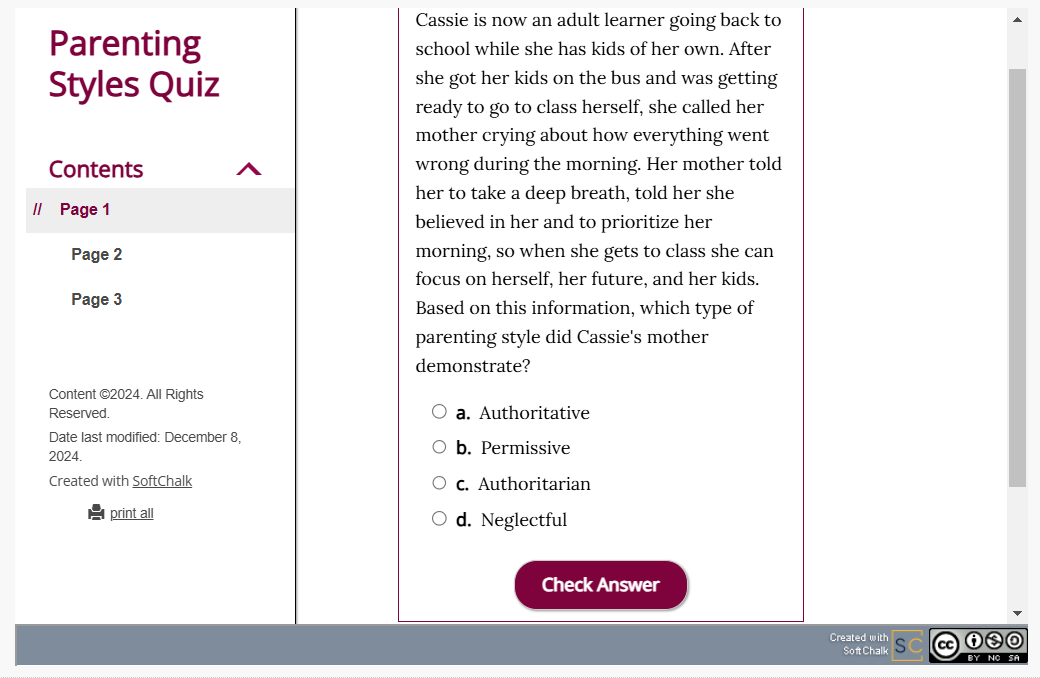

Considering students’ assessment results, I constructed digital learning object quizzes to share with future students by embedding them in my institution’s learning management system. For instance, I used Softchalk to license students’ work using Creative Commons. Figure 5 contains an image of a Softchalk practice quiz I created from student questions. The Creative Commons license is visible in the bottom right-hand corner of the image.

This assessment ends with discussing students’ appraisals of bias and inclusive language in AI models. For a list of discussion questions, refer to Appendix D.

Debrief

I have utilized the AI multiple-choice assessment in two courses and intend to keep it a staple assessment in my curriculum. Students did a proficient job producing creative and unique questions representing our community. They also critiqued the AI output. For instance, students noted the AI model’s lack of the words “they” and “their” in question scenarios; instead, the AI uses specific male or female pronouns. Students also recalled the generative AI’s lack of variety in name choices, such as Gemini’s frequent use of Sarah, Emily, Sam, and Maya. The assessment increased student learning, exposed students to AI tools and inclusive principles, added motivational value as a renewable assessment, and urged students to demonstrate robust critical thinking skills.

Student survey results about the assessment were positive. Of the 31 student responses, only one indicated dissatisfaction with the AI-powered multiple-choice assessment concerning their learning. The remaining students reported being “very satisfied,” “satisfied,” or “neutral.” This student sentiment aligns with the findings of Clinton-Lisell’s (2021) systematic review, which found that students “generally perceived open pedagogy as positive and meaningful experiences” (p. 260). In another study, Clinton-Lisell and Gwozdz (2023) further discovered that “students reported higher levels of intrinsic motivation (inherent interest and enjoyment) with renewable assignments than traditional assignments” (p. 131). Overall, students in my courses echoed the students in these earlier studies and had a worthwhile, satisfying learning experience.

Even the most positive learning experiences come with some challenges, and executing this assessment was no exception. The assessment was initially designed for an upper-level Educational Psychology course but was later adapted for a lower-level Introduction to Psychology course. A key observation from the adaptation was that students in the introductory course showed less attention to assignment details than their upper-level counterparts. For example, some students only submitted one question instead of three. This could be due to the differences in student populations: Educational Psychology students are typically more focused, while Introduction to Psychology students come from various academic backgrounds and abilities.

Another assumption I made in the introductory course was that students would generate another question if the AI-generated one did not apply to our course content or was not scenario-based. Students did not take this initiative. To avoid future confusion, I updated the student directions (see Appendix A) to explain that students can generate multiple questions to evaluate and to emphasize the importance of generating scenario-based questions for revision.

When reflecting on the management of this project, I uncovered technological and time management challenges. The time to grade this assessment was manageable, and the grading rubric (see Appendix A) was efficient. While this assessment is not labor intensive to deploy or grade, collecting and selecting quality multiple-choice questions can be time consuming. This is a challenge reported by many instructors when constructing and deploying open pedagogical projects (Lazzara et al., 2024). In addition, I discovered that downloading PDF documents from the learning management system could be tedious and time consuming and did not provide a smooth transition to an exam question repository. I found that Google and Microsoft Forms survey tools allow respondents to upload documents and images while completing surveys. These tools could streamline student responses, integrate nuanced directions in real time for students, and save time by pooling questions into one column in an Excel document.
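The idea of pooling questions into a single spreadsheet column can be sketched as follows. This is a minimal sketch under stated assumptions, not a description of how Google or Microsoft Forms export data: the sample export, the column names `Share question?` and `Revised question`, and both function names are hypothetical. It filters a CSV export down to the questions students agreed to share and writes them as one column that a spreadsheet program can open.

```python
import csv
import io

# Hypothetical form export; real exports will have different column names.
SAMPLE_EXPORT = """Name,Share question?,Revised question
Student A,Yes,Question text 1
Student B,No,Question text 2
Student C,Yes,Question text 3
"""


def pool_shareable_questions(export_text,
                             consent_col="Share question?",
                             question_col="Revised question"):
    """Return the questions whose authors answered 'Yes' to sharing."""
    reader = csv.DictReader(io.StringIO(export_text))
    return [
        row[question_col].strip()
        for row in reader
        if row[consent_col].strip().lower() == "yes"
    ]


def to_single_column_csv(questions, header="Question"):
    """Write the pooled questions as a one-column CSV string."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow([header])
    for question in questions:
        writer.writerow([question])
    return buffer.getvalue()


pooled = pool_shareable_questions(SAMPLE_EXPORT)
print(to_single_column_csv(pooled))
```

Filtering on the consent column at this stage keeps unshared questions out of the repository from the start, which matches the practice of reusing only questions that students marked as sharable.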

Appraisal

While the assessment presented in this chapter is designed for introductory course students at a university or college, it can be modified to enhance a course focusing on quality assessment construction, such as Tests and Measurements, Educational Assessment and Measurement, Curriculum Design and Development, or Educational Psychology. The assessment directions and rubric specifications could be modified to focus more on effective question stem construction, and students could identify, write, and justify question distractors.

One of the limitations of this assessment is that students could use AI to write some of their ranking rationales. I have found that when asking AI to provide a ranking explanation of the incorrect answers to a multiple-choice question, the AI frequently lumps some of the incorrect answers together, limiting students’ abilities to use LLMs to circumvent constructing their original rationales. Requiring students to use peer-reviewed course materials to write their explanations increases the likelihood that students use their own critical thinking to write their justifications and holds them accountable for doing so.

Another limitation of this assessment is that it only applies to the generation of multiple-choice style questions due to the ranking of incorrect answers. Nevertheless, students could generate and revise short case studies using diverse perspectives and self-representation that could be used for extensive essay exam questions. When doing so, however, the cognitive benefits outlined in this chapter may differ.

Summary

This chapter explored a novel approach to incorporating generative AI into higher education assessment. By leveraging the capabilities of large language models, learners were tasked with creating and refining multiple-choice questions, cultivating critical thinking, and promoting diversity, equity, and inclusion. This chapter demonstrates that traditional assessment practices can be enhanced through AI integration, leading to more engaging and effective learning experiences. This assessment challenged students to evaluate and improve AI-generated content and empowered them to contribute to a growing repository of open educational resources.

The integration of AI into the classroom presents both opportunities and challenges. While AI can automate certain tasks and generate content, maintaining a human-centered approach to education is essential to ensure students use AI as a tool rather than a replacement for human ingenuity and critical thinking. As AI technology evolves, educators must adapt their pedagogical practices accordingly. By embracing innovative assessment strategies like the one presented in this chapter, instructors in higher education can prepare students for the challenges and opportunities of the 21st century.

References

American Psychological Association. (2023). Inclusive language guide (2nd ed.). https://www.apa.org/about/apa/equity-diversity-inclusion/language-guidelines.pdf

Bowen, J., & Watson, C. (2024). Teaching with AI: A practical guide to a new era of human learning. Johns Hopkins University Press.

Churchill, D. (2007). Towards a useful classification of learning objects. Educational Technology Research and Development, 55(5), 479–497. https://doi.org/10.1007/s11423-006-9000-y

Clinton-Lisell, V. (2021). Open pedagogy: A systematic review of empirical findings. Journal of Learning for Development, 8(2), 255–268. https://files.eric.ed.gov/fulltext/EJ1314199.pdf

Clinton-Lisell, V., & Gwozdz, L. (2023). Understanding student experiences of renewable and traditional assignments. College Teaching, 71(2), 125–134. https://doi.org/10.1080/87567555.2023.2179591

Harvard, B. (2020, April 27). Ranking multiple-choice answers to increase cognition. The Effortful Educator. https://theeffortfuleducator.com/2020/04/27/rmcatic/

Herder, L. (2023). AI: A brilliant but biased tool for education. Diverse Issues in Higher Education, 40(11), 20-22. https://research.ebsco.com/linkprocessor/plink?id=add7ade8-0d50-3f0f-b875-70719e4539b6

Jhangiani, R. (2017). Ditching the “disposable assignment” in favor of open pedagogy. In W. Altman & L. Stein (Eds.), Essays from Excellence in Teaching. https://doi.org/10.31219/osf.io/g4kfx

Lazzara, J., Bloom, M., & Clinton-Lisell, V. (2024). Renewable assignments: Comparing faculty and student perceptions. Open Praxis, 16(4), 514–525. https://doi.org/10.55982/openpraxis.16.4.706

Leffer, L. (2023, October 26). Humans absorb bias from AI—and keep it after they stop using the algorithm. Scientific American. https://www.scientificamerican.com/article/humans-absorb-bias-from-ai-and-keep-it-after-they-stop-using-the-algorithm/

Moshman, D. (1982). Exogenous, endogenous, and dialectical constructivism. Developmental Review, 2(4), 371-384. https://doi.org/10.1016/0273-2297(82)90019-3

Ng, D., Leung, J., Chu, S., & Qiao, M. (2021). Conceptualizing AI literacy: An exploratory review. Computers and Education: Artificial Intelligence, 2, 1-11. https://doi.org/10.1016/j.caeai.2021.100041

Roelle, J., Schweppe, J., Endres, T., Lachner, A., von Aufschnaiter, C., Renkl, A., Eitel, A., Leutner, D., Rummer, R., Scheiter, K., & Vorholzer, A. (2022). Combining retrieval practice and generative learning in educational contexts: Promises and challenges. Open Science in Psychology, 54(4), 142–150. https://doi.org/10.1026/0049-8637/a000261

Schunk, D. (2020). Learning theories: An educational perspective (8th ed.). Pearson.

Simpson, T. L. (2002). Dare I oppose constructivist theory? The Educational Forum, 66(4), 347–354. https://doi.org/10.1080/00131720208984854

Walter, Y. (2024). Embracing the future of artificial intelligence in the classroom: The relevance of AI literacy, prompt engineering, and critical thinking in modern education. International Journal of Educational Technology in Higher Education, 21, 1-29. https://doi.org/10.1186/s41239-024-00448-3

Wiley, D., & Hilton III, J. L. (2018). Defining OER-enabled pedagogy. The International Review of Research in Open and Distributed Learning, 19(4), 133–147. https://doi.org/10.19173/irrodl.v19i4.3601

Xia, Q., Weng, X., Ouyang, F., Lin, T., & Chiu, T. (2024). A scoping review on how generative artificial intelligence transforms assessment in higher education. International Journal of Educational Technology in Higher Education, 21(40), 1-22. https://doi.org/10.1186/s41239-024-00468-z

Zeide, E. (2019). Artificial intelligence in higher education: Applications, promise and perils, and ethical questions. EDUCAUSE Review. https://er.educause.edu/articles/2019/8/artificial-intelligence-in-higher-education-applications-promise-and-perils-and-ethical-questions

[1] AI tutorials include: Google’s Gemini, FAQs, and how to write effective prompts.

[2] For lower-level course students, I would also suggest providing directions on how to take screenshots. Tutorials are available for taking screenshots using a PC, a Google Chromebook, or a Mac computer.